💡 Key insights

- Content moderation is the process of reviewing and managing user content to keep platforms safe and legally compliant.

- Most platforms use a mix of automation and human review so they can handle large volumes of posts accurately.

- Strong content moderation reduces harmful material such as hate speech, bullying and misinformation.

- Clear rules and consistent enforcement are essential because moderation must balance user safety with freedom of expression.

Recently, social media platforms have faced more criticism than usual for how slowly their content moderation systems respond. The Australian Competition and Consumer Commission's (ACCC) ongoing Digital Platforms Inquiry reflects these concerns and highlights a need for regulation.

This article discusses how social media platforms moderate their content and considers whether such measures are effective.

Current State of Social Media Regulation

The ACCC's preliminary report observes that social media platforms increasingly perform functions similar to those of media businesses: rather than merely distributing content, they now select and curate it.

However, ‘virtually no media regulation applies to digital platforms’, leaving social media platforms to regulate their content themselves.

How Do Social Media Platforms Moderate Content?

Social media platforms typically employ a combination of manual and automated processes to moderate content. For example, YouTube flags content via:

- machine learning, which flags videos that match the patterns its algorithm has been trained to detect;

- hashing, which flags reuploads by matching them against unique identifiers (hashes) taken from previously removed content; and

- manual flagging by employees, NGOs, government agencies and users.

A YouTube employee then reviews the flagged video and makes the final decision on whether to remove it.
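To make the flagging process above more concrete, here is a minimal Python sketch of such a hybrid pipeline. It is not YouTube's actual system: the `ModerationQueue` class, the `ml_score` input and the 0.9 threshold are hypothetical, and SHA-256 is used as a simplified stand-in for hashing, whereas production systems may rely on perceptual fingerprints that tolerate small edits. Automated signals and user reports only flag content; removal happens in a separate human-review step, and the hash of removed content is recorded so that identical reuploads are caught.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical cut-off above which an ML classifier's score triggers a flag.
ML_THRESHOLD = 0.9


def fingerprint(video_bytes: bytes) -> str:
    """Exact-match identifier: SHA-256 of the raw bytes (a simplified stand-in)."""
    return hashlib.sha256(video_bytes).hexdigest()


@dataclass
class ModerationQueue:
    """Hypothetical hybrid pipeline: automated and user flags feed a human review queue."""
    removed_hashes: set[str] = field(default_factory=set)
    review_queue: list[tuple[str, bytes, list[str]]] = field(default_factory=list)

    def flag_upload(self, video_id: str, video_bytes: bytes,
                    ml_score: float = 0.0, user_reports: int = 0) -> list[str]:
        """Collect flagging reasons; flagged videos are queued, never removed here."""
        reasons = []
        if ml_score >= ML_THRESHOLD:                         # machine learning
            reasons.append("ml_classifier")
        if fingerprint(video_bytes) in self.removed_hashes:  # hashing
            reasons.append("hash_match")
        if user_reports > 0:                                 # manual flagging
            reasons.append("user_report")
        if reasons:
            self.review_queue.append((video_id, video_bytes, reasons))
        return reasons

    def human_review(self, decide: Callable[[str, list[str]], bool]) -> list[str]:
        """A reviewer makes the final call; hashes of removed videos are remembered."""
        removed = []
        for video_id, video_bytes, reasons in self.review_queue:
            if decide(video_id, reasons):
                self.removed_hashes.add(fingerprint(video_bytes))
                removed.append(video_id)
        self.review_queue.clear()
        return removed


if __name__ == "__main__":
    q = ModerationQueue()
    print(q.flag_upload("v1", b"original clip", user_reports=2))  # ['user_report']
    print(q.human_review(lambda vid, reasons: True))              # ['v1']
    print(q.flag_upload("v2", b"original clip"))                  # ['hash_match']
    print(q.flag_upload("v3", b"original clip!"))                 # [] edited copy evades the exact hash
```

The edited reupload `v3` is not flagged, which illustrates why hashing alone only catches content that has already been identified.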

Issues in Content Moderation

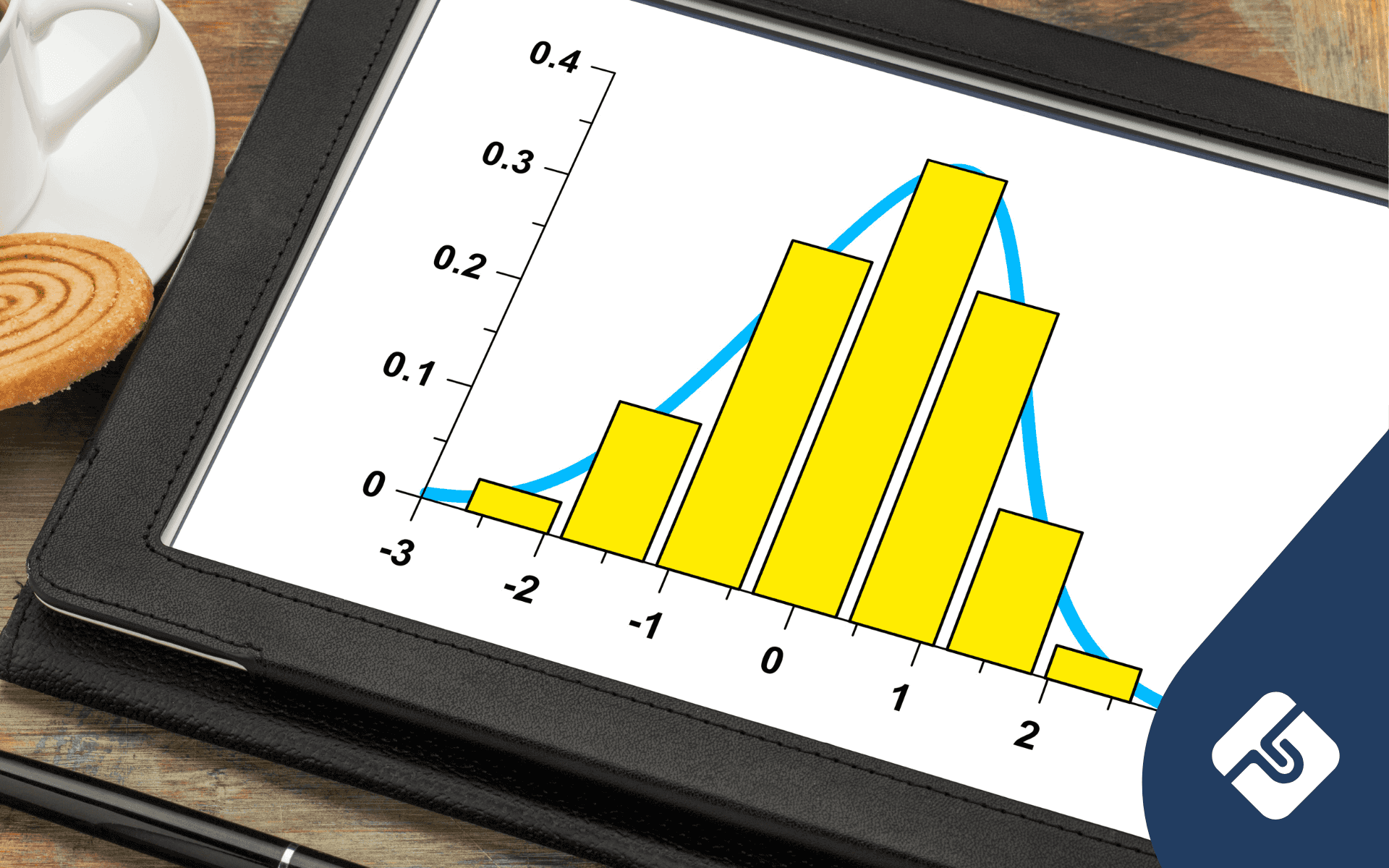

Each flagging method has its own benefits and risks. Machine learning is efficient but applies its algorithm indiscriminately; manual flagging lacks the speed and human resources to keep up with the stream of new content; and hashing is limited to content that has already been identified.

As a result, machine learning and hashing are best suited to content that fits well-defined patterns, such as spam, copyrighted material and reuploads of previously flagged content.

How then do social media sites efficiently and effectively moderate new or original content?

User Reporting

Platforms rely on users to report new or original content that violates community guidelines. In theory, user reporting compensates for the limited resources available for manual flagging and helps align flagging and removal decisions with the public interest.

Facebook has justified its slowness in removing troubling content by arguing that the content was not reported quickly enough for it to be taken down before it circulated widely.

Facebook’s User Reporting System

To report content on Facebook, you must first provide feedback; only the accompanying subtext indicates that you 'can also report the post after giving feedback'. This subtext was not visible when viewing content directly on a page or profile.

Furthermore, an ACCC survey found that only six percent of people who encountered mistaken or inaccurate news articles reported them. Their reasons for not reporting included:

- not realising complaints were an option;

- being too busy; and

- not thinking a complaint would accomplish much.

Reporting requires users to submit feedback and then take the time to fill out a detailed form, which they may not expect to accomplish much. Social media platforms appear to offer users little incentive to report.

Slow Takedown Time

The difficulties of content moderation and the reliance on user reporting combine to make content takedowns slow.

Slow processes may allow harmful content to spread, which can prompt drastic measures, such as major internet providers blocking access to sites, or platforms widening their algorithms to automatically take down more content.

More Moderation or Regulation?

The ACCC calls for takedown procedures to be regulated under mandatory standards, backed by 'meaningful sanctions' and 'enforcement', to 'incentivise the compliance' of platforms.

While such regulation may incentivise compliance, it does not appear to solve the problem:

- upholding sanctions against large overseas companies presents jurisdictional challenges;

- it’s unclear what standards could be applied; and

- the challenges in content moderation make it difficult to determine a solution to slow takedown times.

Adding an unclear legal interest to the already conflicting interests of protecting users and encouraging free expression may further complicate a platform’s obligations.

That's not to say that social media should be entirely unregulated. Rather, regulation should state clearly what compliance requires.

The most obvious way to comply with regulations on takedown times appears to be widening the scope of machine learning algorithms, a step that has already significantly disrupted the careers of some YouTube creators. Ceding control to an algorithm leaves considerable room for error.

Conclusion

Social media platforms moderate their content through a number of processes; however, the flaws in self-regulation highlight the need for change.

The ACCC recommends that this change take the form of regulation. However, it is unclear how the difficulties of content moderation could be navigated to achieve it.

Asking social media platforms to moderate more content may prove difficult without knowing how they should do so, or what the effect would be.

Unsure where to start? Contact a LawPath consultant on 1800 529 728 to learn more about customising legal documents and obtaining a fixed-fee quote from Australia’s largest legal marketplace.